Digital Cinema Dynamic Range

Friday, February 22, 2008 at 12:06AM

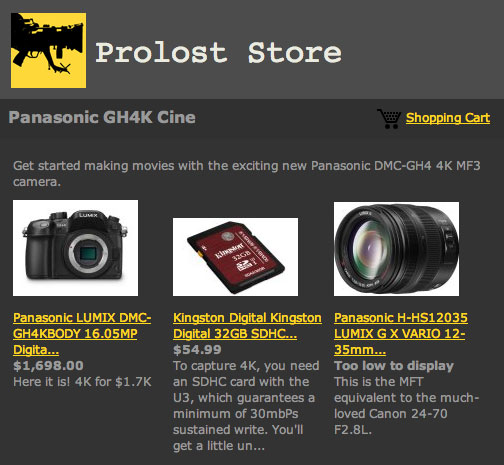

This post opens a little window into my current thoughts about digital cinema, dynamic range, and some recent and ongoing testing of the RED One camera. I thought some of you might be interested in the process I go through when pondering things like this, rather than just some dry results.

Camera tests are a bit funny to me. You test a 3rd grader to give him a grade. You test the waters to see if you want to jump in. And you test house paint colors by pinning some swatches to the wall and trying to get a feel for what you like. I often feel that camera tests are more like that last example than anything else. But there is a kind of testing that makes a ton of sense—the testing you do when you've already 99% decided what camera you want to use, and now you want to figure out how you want to use it to produce a specific result. That's what writer/director Moses Ma did for his film The Ethical Slut. He and DP Paul Nordin knew they wanted to shoot with the RED One, so they staged a test based on a short scene from the film.

To make things interesting, they shot in an airy studio with big windows that they knew they couldn't control. And as you can see, those windows are overexposed, and clipped.

We all knew this was going to cause problems, but the exact nature of these problems has got me thinking about some of my favorite digital cinema questions: What exactly is dynamic range? And what constitutes a film-like image?

And of course a very concrete question: What should we do the next time we're shooting RED and there's a window in the room?

To help with my head-scratching about all this, I turned to After Effects, as I tend to do. I created a virtual scene with common exposure targets in HDR. Here's what it looks like:

And here are the targets annotated:


The gray card is your standard 18% reflectance, and it, like all the cards in the scene, is rendered without any shading so that it returns pure values. The image, being a gamma 1.0 HDR, contains floating-point values that map 1:1 with the diffuse reflectance of the subjects, so the 18% gray card maps to pixel values of 0.18.

The black card is not pure black, but rather a more physically realistic 1% reflectance.

The pure white card is a bit of a hypothetical (nothing reflects 100% of the light that hits it), but it and the other cards are more about exposure targets than simulating a physical object. So we have spots in the frame that represent "white" and one, two, three and four stops over "white." We also have something that doesn't really exist in nature: pure black.

The "skin tones" in the scene are designed so that they both land on the "skin vector" of a vectorscope and rate as 70% luminance in Rec709. So between the skin target and the 18% gray card this scene is designed to be easy-as-pie to expose according to traditional rules-of-thumb.

Video rules of thumb, that is.

Here's the same scene underexposed by four stops so that you can see the relationships of all the exposure targets. This also makes it clear that each card has a patch of "detail" to help distinguish whether the card truly falls within exposure.
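As a rough sketch of the arithmetic behind the chart: each stop over "white" doubles the scene-linear value, and underexposing by four stops divides everything by 16. The dictionary keys and the `expose` helper are illustrative names of my own, not anything taken from the downloadable scene.

```python
# Scene-linear values for the exposure targets described above.
# The key names are illustrative labels only.
targets = {
    "black card (1%)": 0.01,
    "gray card (18%)": 0.18,
    "white (100%)": 1.0,
}

# Each stop over "white" doubles the linear value: 2.0, 4.0, 8.0, 16.0.
for stops in range(1, 5):
    targets[f"white +{stops}"] = 1.0 * 2 ** stops


def expose(value, stops):
    """Shift a scene-linear value by a number of stops (negative = darker)."""
    return value * 2.0 ** stops


# Underexpose the whole scene by four stops.
under4 = {name: expose(value, -4) for name, value in targets.items()}

for name, value in under4.items():
    print(f"{name:>16}: {value:.5f}")
```

With the scene pulled down four stops, the brightest card (four stops over white) lands exactly at 1.0, which is why every card becomes visible in the underexposed view.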

All these images have been converted to sRGB space for viewing on your gamma 2.2 display of course. If you'd like to download the scene as a 16-bit float EXR, you can do so here.

Now that we have our hypothetical scene, it's time to shoot it with a hypothetical camera. A Rec709 camera uses an encoding curve of roughly gamma 1.9, but its images are designed to be displayed at gamma 2.2, which is very close to sRGB, the viewing assumption for all images on this page.

So let's take a look at what happens when we shoot our scene with a Rec709 camera. Following one of many rules-of-thumb on the subject, we'll expose the gray card at 45% luminance and skin tones at 70%. Here are the results:

The same shot on a Waveform Monitor:

As you can see, the gray card is at 45% IRE and the skin tones are at 70%. But you can also see that the other cards are all pegged to the ceiling—even the 100% card. Exposing a pure Rec709 image using the common standards doesn't even leave you enough headroom for something "white."

If I expose the scene down by half a stop you can see the white card come into range in both the image and the scope:

So this is what happened on our RED shoot. We exposed according to video rules using the only image our RED One was capable of displaying, and we wound up with very little room for highlights.
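To make the headroom problem concrete, here is a minimal sketch that assumes the hypothetical camera applies the plain Rec. 709 encoding curve with a hard clip at 100% and no rolloff. The function names and the `gain` variable are my own; the 45% gray target and the half-stop pull-down come from the text above.

```python
def rec709_oetf(linear):
    """Rec. 709 camera encoding curve, hard-clipped to the 0-1 signal range."""
    linear = min(max(linear, 0.0), 1.0)
    if linear < 0.018:
        return 4.5 * linear
    return 1.099 * linear ** 0.45 - 0.099


def rec709_inverse(signal):
    """Inverse of the curve above, valid for signals above the linear toe."""
    return ((signal + 0.099) / 1.099) ** (1 / 0.45)


# Exposure gain that places the 18% gray card at 45% signal.
gain = rec709_inverse(0.45) / 0.18

for name, scene in [("gray", 0.18), ("white", 1.0), ("white +1", 2.0)]:
    print(f"{name:>9}: {100 * rec709_oetf(scene * gain):.1f}%")

# Pulling exposure down half a stop brings the white card back under clip.
half_stop_down = gain * 2 ** -0.5
white_after = rec709_oetf(1.0 * half_stop_down)
print(f"white at -1/2 stop: {100 * white_after:.1f}%")
```

Under these assumptions the gain works out to a little more than 1.0, so a 100% reflector is already past the clip point, and the half-stop pull-down puts it a little above 90%.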

You might ask what the big deal is here. "Sure, the windows (or cards) are blown out, but that's the picture we're making! The windows are bright and I want them to look white." That would be a perfectly reasonable position to take—unless you planned on transferring to film.

See, color negative film has a huge shoulder, the part of the famous s-curve at the top where it slopes gently off. "White" is nowhere near overexposed on film—it's right at the beginning of this shoulder, right after the straightline portion of the curve.

Watch what happens when I take my Rec709 image and convert it to Cineon Log using a gamma of 2.2:

Here I used the default settings of 10-bit black = 95, white = 685. As you can see, the white card and all its overexposed companions are maxed out at 685, nowhere near the maximum 10-bit value of 1023.
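For readers who want to see why clipped video white stalls at 685, here is a hedged sketch of a linear-to-Cineon conversion. The black and white points are the ones quoted above; the 0.002 density step and 0.6 negative gamma are the conventional Cineon constants and are my assumption about what the conversion tool does internally. The sketch starts from an already linearized image, so the gamma 2.2 step is not shown.

```python
import math

REF_WHITE = 685           # 10-bit code for "white" (from the text)
REF_BLACK = 95            # 10-bit code for black (from the text)
DENSITY_PER_CODE = 0.002  # assumed: conventional Cineon density step
NEG_GAMMA = 0.6           # assumed: conventional Cineon negative gamma


def linear_to_cineon(linear):
    """Map a linear value (1.0 = white) to a 10-bit Cineon log code."""
    linear = max(linear, 0.0)
    offset = 10 ** ((REF_BLACK - REF_WHITE) * DENSITY_PER_CODE / NEG_GAMMA)
    scaled = linear * (1 - offset) + offset
    code = REF_WHITE + math.log10(scaled) * NEG_GAMMA / DENSITY_PER_CODE
    return max(0, min(1023, round(code)))


for name, scene in [("gray", 0.18), ("white", 1.0), ("white +1", 2.0), ("white +4", 16.0)]:
    clipped = min(scene, 1.0)  # a clipped video image holds nothing above white
    print(f"{name:>9}: code {linear_to_cineon(clipped)}")
```

Because the video image has already thrown away everything above 1.0, every overexposed card converts to the same code as white, leaving the range from 686 to 1023 empty.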

Here's what that image would look like printed to Kodak 2383 print film:

And now you see the problem. The clipped highlights map not to white, but to a pinkish gray that's about 80% of the available brightness.

You might suggest changing the white-point mappings so that those clipped whites get placed closer to 1023, but in doing so you brighten up the entire scene:

...but now our carefully metered gray card and person are way overexposed.

And this is exactly what happened with our test footage. We converted it to log using Red Alert and graded it under a 2383 preview LUT, and the windows appeared as pink, flat fields rather than sunny, overexposed sunlight:

Now the truth is, most Rec709 cameras save a little room above pure signal white for overexposure, and RED One is no exception. Using Red Alert I was able to extract a bit more out of those windows—but just a bit.

What traditional video cameras tend to do with that headroom is build a little rolloff into the Rec709 curve. Like a tiny version of film's mighty shoulder, this rolloff allows a more graceful clip into white while still keeping things like gray cards on their waveform targets. Here's a hypothetical Rec709 image with a bit of a rolloff:

As you can see, gray is still at 45%, but now "white" maps to about 94%, and even the one-stop-over card is not fully blown out. This more closely approximates the behavior of most HD video cameras, and it would seem to be a good thing. What a clever person can do when transferring this kind of video to film is undo that shoulder curve and pull that extra bit of highlight latitude back up into the brighter Cineon registers without affecting the midtones, resulting in less flattened whites and as-expected mids.

But this concept, while critical for transferring old-school video to film, has nothing to do with RED, or any camera that shoots raw. Because how that rolloff works inside the camera is by underexposing, and then creating a linear image that is then bent into this funky bastardization of the Rec709 curve. So the camera that made the rolloff image above would first capture this image internally:

(note that the image has been converted to Rec709 for viewing)

...and then pushes up the mids to create this:

This is important when your camera records compressed, limited-bit-depth images. On Red it wouldn't help at all, because the gamma curve is merely a preview. But that doesn't mean we can't learn from the process.

The camera gave itself extra room for highlights by underexposing. A 1 1/4 stop underexposure gave the camera enough highlight latitude to create a nice soft shoulder.

And so we come to the conclusion that was, of course, blatantly obvious at the beginning: To get more highlight latitude, we'll need to underexpose. Obvious indeed, but what's maybe not so obvious is that without the rolloff tricks that we're accustomed to from our video cameras to fall back on, we can really screw ourselves by failing to underexpose RED One.

But how much should we underexpose? For one suggestion of how much, let's look at another digital cinema camera, the Panavision Genesis. The Genesis shoots to a color space known as "Panalog." Rather than using a gamma curve, it uses a logarithmic curve similar enough to the Cineon log curve that one can actually recreate the Panalog transfer function using a standard Cineon lin/log tool.

Here is our scene in Panalog, exposed per Panavision's guidelines:

Note that while we still get to give our gray card a decent exposure, it's not up at 45%. And we have no trouble holding onto detail in the two-stops-over card. Lo and behold, this Panalog image transfers to film very nicely indeed:

(boosted 2 stops in printer lights)

Nothing magical here, although I do think there is real brilliance behind the Panalog format, since it can both be legible on a video display and transfer to film elegantly. But all that's happening is that the Genesis is recording a linear image on its chip that spans scene values from 0% to 600%. The log encoding is only mandated by the bit-depth of the recording medium. Armed with that knowledge, we can create a Rec709 version of the 0.0–6.0 linear image that the Genesis records:

And here we have the key. If we want the RED One to match the Genesis's latitude, this must be our exposure target in Rec709 (which, at the time of this writing, is the only monitoring option for the RED One, although that should change soon). We must ignore RED One's suggestions to expose it like a video camera.
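Waveform targets for this kind of exposure can be derived with a few lines of arithmetic. The sketch below assumes the monitoring path is the plain Rec. 709 curve with no rolloff, and that the skin patch is defined as the value that reads 70% when gray is placed at 45%. Both are my assumptions, and the names `SCENE_MAX`, `video_gain` and `skin_linear` are illustrative.

```python
import math


def rec709_oetf(linear):
    """Rec. 709 camera encoding curve, hard-clipped to the 0-1 signal range."""
    linear = min(max(linear, 0.0), 1.0)
    if linear < 0.018:
        return 4.5 * linear
    return 1.099 * linear ** 0.45 - 0.099


def rec709_inverse(signal):
    """Inverse of the curve above, valid for signals above the linear toe."""
    return ((signal + 0.099) / 1.099) ** (1 / 0.45)


SCENE_MAX = 6.0  # the sensor range runs from 0% to 600% of scene white

# Recover the skin patch's scene-linear value from the video rule of thumb
# (gray card at 45%, skin at 70%).
video_gain = rec709_inverse(0.45) / 0.18
skin_linear = rec709_inverse(0.70) / video_gain

# Squeeze the 0.0-6.0 linear range into 0.0-1.0 before the Rec. 709 curve.
gray_ire = 100 * rec709_oetf(0.18 / SCENE_MAX)          # about 12.8
skin_ire = 100 * rec709_oetf(skin_linear / SCENE_MAX)   # about 23.1
white_ire = 100 * rec709_oetf(1.0 / SCENE_MAX)          # about 39.2
stops_under = math.log2(SCENE_MAX)                      # about 2.58 stops

print(f"gray {gray_ire:.1f}%  skin {skin_ire:.1f}%  white {white_ire:.1f}%")
print(f"underexposure: {stops_under:.2f} stops")
```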

Put 18% gray at 12.8% IRE.

Put white folk at 23.1% IRE.

"White" will land at 39.2% IRE, and you'll hold values all the way up to (and slightly beyond) 600%.

No problem. Except for two things:

This dark image won't be any fun to look at. Not for directors and not for DPs. Something more like Panalog would be better, and something to which a 3D LUT could be applied would be ideal.

But more importantly, RED One, like many cameras, has compression and noise, two things that show up like crazy in underexposed images. By standardizing on an underexposed image, we are putting the most important value ranges down in the mud where they just might get stepped on.

But hey, that's what Panavision's doing! They just happened to plan their entire camera and imaging pipeline around what they perceived as a digital cinema mandate: hold onto enough highlight values that your images look terrific on film.

So how will the RED One handle being underexposed by 2.580 stops across the board? We'll find out next week when Paul shoots his next round of tests!

Oh, and one last thing, just to bring this all home. We've seen how a Rec709 camera can't even hold 100% scene illumination without some underexposure, usually found in the form of a rolloff curve that allows it to capture maybe up to about 150%. We've seen how the Genesis cleverly holds onto 600% scene illumination. But what of film? Well, there are a lot of different film stocks out there, but as a rule the Cineon log model, which makes no accounting for toe or shoulder, maps Cineon log 1023 to 1,352%.
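That figure can be checked with the conventional Cineon log-to-linear formula. This is a sketch under assumptions: the 95 and 685 reference points appear earlier in the post, while the 0.002 density step and 0.6 negative gamma are the standard Cineon constants that I am assuming here. The function name is my own.

```python
import math


def cineon_to_linear(code, ref_white=685, ref_black=95,
                     density_per_code=0.002, neg_gamma=0.6):
    """Convert a 10-bit Cineon log code to linear, where 1.0 is white."""

    def gain(c):
        return 10 ** ((c - ref_white) * density_per_code / neg_gamma)

    offset = gain(ref_black)  # black-point offset so that code 95 maps to 0.0
    return (gain(code) - offset) / (1 - offset)


top = cineon_to_linear(1023)
print(f"code 1023 -> {top:.2f} x white")            # about 13.52, i.e. 1,352%
print(f"stops over white: {math.log2(top):.2f}")    # a bit under four stops
```

Since the top code sits a little under four stops above white, a patch four stops over (16.0 linear) clips only slightly.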

That's right, 1,352%. Here's what that looks like:

Only the 4-stops-over patch is clipped, and just barely (and it wouldn't be if the shoulder was modeled). Shoot that out to film and you get this:

A full range of exposure, rich skin tones, and subtle detail in bright highlights.

Just a little reminder of what we're all shooting for here.

Reader Comments (23)

Good Lord, Stu. That was long, but that was great stuff nonetheless.

Wow. That was epic and awesome. Thanks for sharing your findings with the RED.

Stu,

I read your post. It was very informative, interesting, and a very carefully thought-out experiment.

I may not have gotten the full gist of it, but correct me if I am wrong, that you are arguing that we should not go from Rec 709 Log to film (via a lut), and might have used a straight linear mapping to film (via linear->log) as what the Panavision panalog is doing.

I concur with you as I have argued in the favor of a similar approach on the RedUser forum before, the only difference is that instead of Panavision model of linear capture -> log, I was more in the favor of log capture (via non-linear CMOS) -> linear -- just as what happens on Film negative. This approach might introduce a little more noise because of addition of a few more components before ADC, but I think such models are worth exploring.

You are welcome to have a look at that thread at the following link:

http://www.reduser.net/forum/showthread.php?t=8493

Thanks again for all your efforts and great images.

-- Joofa --

Amazing. Very useful information. Things are coming together in my head a little bit better now.

Stu, could this same logic be applied to consumer DSLR shooting in RAW mode?

I have found in my own tests with a Canon Rebel XT that I needed to underexpose almost any photo by -1.5 stops to hold more dyn range.

thx for the cool info!

epic indeed... there's a lot of info there that you may never find anywhere else and that is incredibly appreciated.

Congratulations Stu,

Great work!

@vfxpro: I think the point is if you're capturing using a device that has a 5 stop dynamic range, but you want to transfer to a medium that has a 8 stop dynamic range you have to decide which 3 stops of that medium you're not going to use.

If you expose for mid gray, then your highlights will clip 1.5 stops down from the top.

If you underexpose by 1.5 stops, you'll get the highlights in the right place, but there's 3 stops of shadow detail gone.

If you overexpose by 1.5 stops, you'll get all that shadow detail, but your highlights will clip 3 stops down.

So each is suitable for different situations. Trouble is, to do anything clever you need to know the characteristics of the medium you're going to transfer to.

What surprises me is the statement that Red One only has the dynamic range of a Rec709 camera. I doubt that's true.

...or is it just that the only way of measuring the exposure was through Rec709 equipment? (monitors, scopes)

VFXPRO:

It does carry over.

A little background: I've been a professional digital photographer for almost 10 years starting back with the big Kodak DCS line. In 2000 when I got the Nikon D1, that is when I learned that if I underexposed by .66 - 1.33 stops, that I retained much more image detail and most importantly, color detail. (I step in thirds.)

6 different camera models later, today I shoot on the 1DsII and still do the same.

Wow Stu. This is not only your best post ever but one of the best pieces on digital video cinematography I've ever read. Instant Classic.

Stu your discussion is really interesting and clearly you have put a lot of work into this - but I need to ask if some of the base assumptions of workflow are not wrong. Actually that remark is too negative - your piece is really interesting regardless but maybe there is more to it. As you know, I respect your opinion a lot - so I offer this as creative technical discussion not as argument.

Let me explain:

1. It appears - and I could be wrong - that much of this discussion is based on the REC 709 output from the RED.

This is a valid thing to look at in some cases, but also one could argue it is very much like testing film by looking at the video split from the film camera. This is not the camera's output. Unlike a Genesis or other video camera, the HD output is just a low resolution fast and dirty video monitoring tool. This is true in many ways - quality of downres, demosaic etc - this output is a realtime ASIC version of the actual RAW sensor data - it is the 'LCD display on the back of the stills camera which shoots RAW files'. It is not the best place to judge the camera output.

2. You did say you went into RED Alert and recovered a little - but as you spoke so little about the RED workflow - I'd love to understand if your 'testing' was based on the RED685 log files from the RAW or Log 685 from the REC 709 - as the latter never happens.

3. The camera shoots real Linear response curves and these are captured in the RAW data which is then encoded into the .r3d files. Rec709 is one view on this data but not the data's format nor the base to work on - unless you want to do a quick and easy HD/SD post workflow.

4. So what should we be using and how is it different?

Firstly the .r3d is the sensor data and NOT that data after rgb-> YUV , matrixing, debayering, whitebalance and ISO adjustments. So this means that while I look at REC709 - with clipping, there is more data captured than this displays. The easiest way to see this is to open a .r3d in RED Alert, with clipping. The histogram will continue to the right and hit the end of the histogram. Now change to a RED log file format such as RED 685 - and you will see a spike at the 685 - indicating more data at the clipping point.

Now, and this is the key aspect I did not see you cover: CHANGE the ISO. Drop the ISO/ASA from say 320 to 250, and you will see clipped data returned to the highlights.

5. What just happened? Well, we asked Red Alert to take the correctly exposed material but effectively transfer a compressed grade, with more fall off into the highlights, but we did not underexpose the original to get this. Nor did we need to look at dark images on set. We simply transferred the data more effectively for film. But this is not a 10 bit operation - at this stage RED Alert is dealing with 16 bit data (with some floating point operations). Much like a film scanner - only without capturing film grain :-). And like film - the DOP knows that there is "more there than what is showing on the monitor" - you can flick the ISO lower on set to check if you want. ISO on set is non-destructive.

6. Does this dramatically increase the dynamic range of RED?

No. RED does not have the dynamic range of film nor say an F23 (although this may change in the future). But it does have a very different approach to post - one not based on video standards (Rec709 is nothing more than a video HD standard). By testing it via the rec709 path - if that is what you did, ... does not embrace this data approach.

7. But perhaps you would think the curve into the high end is still wrong, well there is still the option of creating your own Log function and exporting using a custom Log export.

But to the point of dj's comment post. Red is exactly linear sensor to LOG. (and all sensors are linear in response at sensor level).

But Stu I may be wrong - you may have done all of this - I just did not get that from your discussion.

I welcome your reply, as always thanks for raising the level of the discussion.

Mike Seymour

fxguide.com

fxphd.com

Hey Mike, thanks for the detailed comment.

On point number one, you are absolutely correct. I am specifically concerned with the problem of how to shoot with a "digital cinema" camera that only offers a video gamma output. All of my musings are based on the issue of how to take this down-and-dirty preview and produce predictable film-like results.

On point two, no testing was based on a Rec709 conversion that was then converted to log. All my testing was done using variations on the PD Log 685 conversion in RED Alert.

Point three is one I understand quite well and made quite an effort to explain in my post, when I discussed the inapplicability of notions like video knee and other highlight rolloff processing.

RED One shoots a linear image and has a hard clip at a somewhat difficult to predict place just north of what you see in the live Rec709 output. That's if you rate the camera at near its native sensitivity. As David Mullen pointed out, if you rate the camera faster, the Rec709 preview represents even less of the available range.

But to point 5, I have to say that while RED One has some headroom, it's not "like film." Film has 6+ stops over what you'd think of as white, and RED One most certainly does not unless you rate it six stops slow, in which case I imagine the noise will be a big problem. But I'll be testing that rather than assuming.

It's dangerous to have this headroom be an un-preview-able unknown quantity and to suggest that one should just expose RED One like film. What I am trying to figure out is how to use the evolving preview options of this amazing new camera to capture film-like images that can be graded in a traditional DI pipeline.

Genesis does this well with Panalog, and my hopes are high that future previewing options will include RED Log and other similar options, with their own appropriate exposure aids.

But even when that happens, the sensor won't change (short of firmware miracles, which aren't impossible), so the degree of underexposure necessary to hold a Panalog's worth of stops will be the same then as now. And that is an alarming -2.6 stops.

Mike, I think Stu is trying to approach the issue from a practical, generic viewpoint.

A lot of what he says is already practised in various parts of image industries, including digital stills users, and in the VFX industry to work around linear imagery workflows that need to be transferred to film.

Your extensive post seems to only confuse the point.

Thanks for this very thorough post.

Some of the DSLR raw comparisons have me scratching my head.

Everything I've learned suggests overexposing to maximize signal-to-noise in linear capture.

(See more at Luminous Landscape: http://www.luminous-landscape.com/tutorials/expose-right.shtml, Bruce Fraser: http://www.adobe.com/digitalimag/pdfs/linear_gamma.pdf, or Google "expose to the right".)

What you're talking about here is specific to a film-out workflow. I'm guessing this is what you meant by "we are putting the most important value ranges down in the mud where they just might get stepped on."

But I might be wrong...

Hey Stu,

Great post. One thing that I'm a little confused about is your capture format for the camera. What are you capturing to? I'm not that familiar with the RED.

My concern (unfounded and untested, of course) would be that by underexposing to increase the captured dynamic range, you are going to end up with obvious visible quantization artifacts once you select the final dynamic range. This quantization could either occur at the sensor level, or in the conversion to a non-RAW format (if you are capturing RAW).

It sounds to me that you're capturing (or converting) from raw to 10 bit panalog to avoid quantization errors in the file format. Which just leaves how well the Genesis sensor operates at lower light levels in regards to noise and quantization. So I guess depending on your tests to come, you may need to trade off capturing all 600% against the noise and quantization error of the chip.

--c

Hi Elliot, you're right, you want to expose "to the right" to maximize signal to noise ratio.

The chief issue is how different curves visually redistribute the dynamic range of an inherently linear sensor.

For instance, if you have a CMOS sensor with 11 f-stops of linear dynamic range, that means the total amount of dynamic range you have to play with is only 11 f-stops . . . no more, no less. How many f-stops of over-exposure you get will depend on where you place "middle-grey" along that range. For instance, if you place middle grey at a point that is 5 f-stops down from the white-clip point, you have 5 f-stops of headroom, and 6 f-stops of shadow. If you move the middle grey point to 3 f-stops under the white-clip point, you now only have 3 f-stops of over-exposure to clip and 8 f-stops of shadow. If you place the middle grey value at 6 f-stops below the white-clip point, you will get 6 f-stops of headroom and 5 f-stops of shadow . . . in all cases the limiting factor in your headroom is the noise floor. The closer you move the middle grey point to the noise floor, the higher the gains you will be applying to the noise floor, and the more visible noise you will get in the image.

Now, let's apply this to a 12-bit image so we can see what's happening with these different gamma curves.

In a REC709 image curve, the .18 linear value coincides with a 12-bit value of 1675, or basically .40. So basically a middle-grey card exposed at 18% grey would look "proper-ish" on typical screen. Now, in a photometrically linear image, for every f-stop increase, you need to double the linear value. Taking that .18 and doubling it for +1 f-stop gives us .36 (REC709 .59 or 2146 in 12-bit). Doubling it again for +2 f-stops gives us a linear value of .72 (REC709 .84 or 3477 12-bit). Doubling it again for +3 f-stops and . . . . oops, we've clipped . . . looks like you can only get +2.5 f-stops before you clip.

So, if you take a "18%" Middle-grey card, and map it to a .18 linear value, which would make it look "normal" in REC709, when applied to my 11 f-stop example, you've actually placed middle grey only 2.5 f-stops below white-clip.

Now when exposing in LOG space, what happens is mapping a "middle-grey" card to .18 linear code values won't look right . . . it would look way too bright. For instance, .18 in a LOG base 90 curve translates to a value of .61, or 2498, which would be very bright . . . to get down to the .40 value that would look "normal", you would need to under-expose down to .07, or more than another f-stop (one f-stop down from .18 would be .09), which now in-turn gives you more headroom. Or going back to the original example, the LOG curve allows that middle-grey value to push back down the dynamic range scale of the sensor (say at -4.5 f-stops from white-clip), while keeping the middle grey and the overall exposure of the scene looking "normal".

So, to sum up, REC709 will increase the SNR, but it does so by making you push the middle-grey point up on the dynamic range of a sensor. While you might think 18% middle-grey is in the "middle" of the dynamic range of the sensor, it's not, unless your sensor was only good for 5-6 f-stops. A logarithmic scale on the other hand allows you to expose for "middle-grey" at values below linear .18, but have them still map to "normal-looking" grey values after the logarithmic curve conversion. As noted in our example above, you could expose a middle grey card at linear .07 and get the resulting value at the same ending value on-screen.
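The headroom arithmetic in this comment can be sketched in a few lines. The comment does not give a formula for the "LOG base 90" curve, so the `1 + log_90(x)` form below is only my guess at it (it reproduces the quoted values to within rounding). All function names are hypothetical.

```python
import math


def headroom_stops(grey_linear, clip_linear=1.0):
    """Stops between the middle-grey exposure and the white-clip point."""
    return math.log2(clip_linear / grey_linear)


def log_encode(linear, base=90.0):
    """Assumed form of the 'LOG base 90' curve: 1 + log_base(linear)."""
    return 1.0 + math.log(linear, base)


def log_decode(signal, base=90.0):
    """Inverse of log_encode."""
    return base ** (signal - 1.0)


# Middle grey exposed at linear 0.18 leaves roughly 2.5 stops before clip.
print(f"headroom at 0.18: {headroom_stops(0.18):.2f} stops")

# Linear exposure that displays at 0.40 through the assumed log curve.
grey_for_log = log_decode(0.40)
print(f"linear grey for 0.40 in log: {grey_for_log:.3f}")
print(f"headroom there: {headroom_stops(grey_for_log):.2f} stops")
```

Whatever the exact curve, the pattern is the same: a log-style encoding lets grey sit lower on the sensor while still displaying at a normal level, and the headroom grows by exactly the number of stops grey was lowered.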

Damn! That was tight Jason! Concise, succinct... perfect. I love it!

Thanks for sharing the knowledge Stu.

Great stuff Stu. Love hearing your thoughts on RED and the whole process in general.

Great follow up comment as well Jason.

This is so rarely written up and boiled down into useable info.

Well, the confusion is raging on this marvelous new camera... Just watched the StudioDaily RED webinar, where Andrew Young of DuArt held forth on the 'correct' exposure practices with the RED. Here are some notes I took...(paraphrased)

"Digital sensors are really analog devices. The Mysterium sensor digitizes 12-bits, with 4096 possible levels of tonality. Even our eyes apply compression to focus on the range important to us. Sensors are linear devices, capture range and fall off. Turns light into numbers. Brightest value is 4095 divided by half, (one stop) level is described 2048 (half of 4096) therefore, half of all pixels on the sensor are dedicated to describing brightest level.

For production, one must gamma-correct this image, moving the data distribution to a more normal level. Low-end exposure can generate noise. Warning, if you underexpose by one stop, you’re throwing away half the information!"
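The "half the information" claim is easier to follow as a count of code values per stop. This is a minimal sketch for an idealized 12-bit linear encoding, ignoring noise and any black offset. Note that it counts code values rather than pixels, and the function name is illustrative.

```python
def levels_per_stop(bit_depth, stops):
    """Count the linear code values that fall inside each stop below clip."""
    top = 2 ** bit_depth
    counts = []
    for _ in range(stops):
        bottom = top // 2          # one stop down halves the linear value
        counts.append(top - bottom)
        top = bottom
    return counts


for i, count in enumerate(levels_per_stop(12, 6), start=1):
    print(f"stop {i} below clip: {count} code values")
```

The brightest stop takes 2048 of the 4096 available values and each stop below it gets half as many, so leaving the top stop unused gives up half of the available code values.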

Ansel Adams' axiom "Expose for the shadows, Develop for the highlights" is therefore upended for the REDCODE RAW Rule for Mysterium "Expose for the highlights, process for the shadows".

I've never seen a RED camera. I have no 'dog' in this race, other than I hope to understand the capabilities and limitations of this technology. Thoughts?

This issue is not directly dependent upon the Rec-709 vs. Log space. It is dependent upon how a particular manufacturer (Red One) chose to define the white point for Rec 709 space, and what they did with values above that white point.

It has been pointed out that Red One selected the white point for Rec 709 just shy of the extreme (0.4 EV), and hence, any values in that 0.4 EV range are "crushed" by Rec 709 conversion process by default.

Again, this issue should not be confused with a generic Rec 709 vs. Log Space. It is how the manufacturer treated the white point. They could have easily reversed the situation and by altering Rec 709 white point given more highlight information in Rec 709 monitoring, and crushed whites in the Log space. Would we have said then that Log is worse than Rec 709?

Great post. My tests last fall with Red concluded that when using a waveform to judge exposure you'll get the most dynamic range at ISO 320 if you place middle grey at 30% and Caucasian skin tone at 43%. That being said I've since discovered that if you have a low contrast scene overexpose by as much as 2 stops to get a much cleaner image. Just make sure those highlights don't get near clipping.

Stu,

A most excellent post and the most relevant to what I do every day. You have effectively provided the technical detail for that which I have been doing instinctively - leaving some room at the top of my linear exposure so highlights can roll-off more organically when s-curves are applied for digital finishes (as opposed to film-outs.)

Thanks again,

Michael Morlan

Stu,

in relation to your comment:

So how will the RED One handle being underexposed by 2.580 stops across the board? We'll find out next week when Paul shoots his next round of tests!

what was the conclusion of the shoot?