The Film Industry is Broken

Tuesday, September 4, 2007 at 5:59PM

The film industry has a tremendous need right now for an open standard for communicating color grading information: a Universal Color Metadata format.

There are those who are attempting to standardize a "CDL" (Color Decision List) format, but it would communicate only one primary color correction. There are those trying to standardize 3D LUT formats, but LUTs cannot communicate masked corrections that are the stock in trade of colorists everywhere. There are those tackling color management, but that's a different problem entirely.

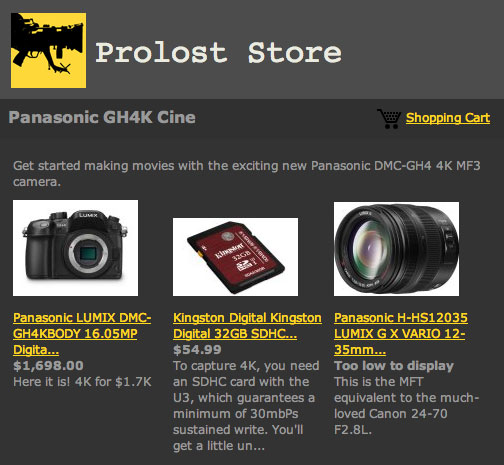

Look at the core color grading features of Autodesk Lustre, Assimilate Scratch, Apple Color, and just about any other color grading system. You'll see that they are nearly identical:

• Primary color correction using lift, gamma, gain, and saturation

• RGB curves

• Hue/Sat/Lum curves

• Some number of layered "secondary" corrections that can be masked using simple shapes, splines, and/or an HSL key

Every movie, TV show, and commercial you've ever seen has been corrected with those simple controls (often even fewer, since the popular Da Vinci systems do not offer spline masks). It's safe to say that the industry has decided that this configuration of color control is ideal for the task at hand. While each manufacturer's system has its nuances, unique features, and UI, they all agree on a basic toolset.
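To make the shared toolset concrete, here is a minimal sketch of a primary correction using lift, gamma, gain, and saturation. It is only one plausible formulation: the order of operations, the `primary_correct` name, and the Rec. 709 luma weights used for saturation are all assumptions, and each grading system may well do this differently.

```python
def primary_correct(rgb, lift=0.0, gamma=1.0, gain=1.0, saturation=1.0):
    """Apply one possible lift/gamma/gain/saturation formulation.

    rgb is a triple of floats nominally in 0..1. gamma must be > 0.
    This is an illustrative convention, not any vendor's actual math.
    """
    graded = []
    for c in rgb:
        c = c + lift * (1.0 - c)          # lift raises blacks, leaves white fixed
        c = c * gain                      # gain scales the whole range
        c = max(c, 0.0) ** (1.0 / gamma)  # gamma bends the midtones
        graded.append(c)
    # Saturation: scale each channel's distance from luma (Rec. 709 weights assumed).
    luma = 0.2126 * graded[0] + 0.7152 * graded[1] + 0.0722 * graded[2]
    return tuple(luma + saturation * (c - luma) for c in graded)


neutral = primary_correct((0.2, 0.5, 0.8))                  # default settings: no change
mono = primary_correct((0.2, 0.5, 0.8), saturation=0.0)     # fully desaturated
lifted_black = primary_correct((0.0, 0.0, 0.0), lift=0.1)   # blacks raised to 0.1
```

Four numbers per shot are all it takes to describe this part of a grade, which is what makes the lack of an interchange format so frustrating.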

And yet there is no standardized way of communicating color grades between these systems.

This sucks, and we need someone to step in and make it not suck. Autodesk, Apple, Assimilate, Iridas; this means you. One of you needs to step up and publish an open standard for communicating and executing the type of color correction that is now standard on all motion media. This standard needs to come from an industry leader, someone with some weight in the industry and a healthy install base. And the others need to support this standard fully.

Currently the film industry is working in a quite stupid way when it comes to the color grading process, especially with regards to visual effects. An effects artist creates a shot, possibly with some rough idea of what color correction the director has in mind for a scene, but often with none. Then the shot is filmed out and approved. Only once it is approved is it then sent to the DI facility, where a colorist proceeds to grade it, possibly making it look entirely unlike anything the effects artist ever imagined.

Certainly it is the effects artist's job to deliver a robust shot that can withstand a variety of color corrections, but working blind like this doesn't benefit anyone. The artist may labor to create a subtle effect in the shadows only to have the final shot get crushed into such a contrasty look that the entire shadow range is now black.

But imagine if early DI work on the sequence had begun sometime during the effects work on this shot. As the DI progresses, a tiny little file containing the color grade for this shot could be published by the DI house. The effects artist would update to the latest grade and instantly see how the shot might look. As work-in-progress versions of the shot are sent from the effects house to the production for review, they would be reviewed under this current color correction. As the colorist responded to the new shots, the updated grade information would be re-published and immediately available to all parties.

Result? The effects artist is no longer working blind. The director and studio get to approve shots as they will actually look in the movie rather than in a vacuum. Everyone gets their work done faster and the results look better. All of this informed by a direct line of communication between the person who originally created the images (the cinematographer) and the person who masters them (the colorist).

Oh man, it would be so great.

I've worked on movies where the DI so radically altered the look of our effects work that I wound up flying to the DI house days before our deadline to scribble down notes about which aspects of which shots should be tweaked to survive the aggressive new look. I've worked on movies that have been graded three times: once as dailies were transferred for the edit, once in HD for a temp screening, and again for the final DI. Please trust me when I say that the current situation is broken. We need an industry leader to step in and save us from our own stupidity.

And this industry leader should do so with their kimono open wide. Opening up a standard will involve giving away some of your secret sauce. Maybe there's something about your system that you think is special, or proprietary. Some order of operations that you feel gives you an advantage. Well, you could "advantage" yourself right into obscurity if your competition beats you to the punch and creates an open standard that everyone else adopts. The company that creates the standard that gets adopted will have a huge commercial advantage. You can learn about the business advantages of "radical transparency" from much more qualified people than myself.

Of course, there will be challenges. Although each grading system has nearly identical features, they probably all implement them differently. It's not obvious how much information should be bundled with a grading metadata file. Should an input LUT be included? A preview LUT? Should transformations be included? Animated parameters? It will take some effort to figure all that out.

But the company that does it will have built the better mousetrap, and they'd better be prepared for the film industry to beat a path to their door. So who's it going to be?

Until you step up, we will keep trudging along, making movies incorrectly and growing prematurely gray because of color.

Reader Comments (36)

Stu, it's like you've been reading my mind, and probably others.

Great post.

Joe C.

What I'd like to see is a merger between the cineSpace (http://cinespace.risingsunresearch.com/) and the IRIDAS (http://iridas.com/) .look format.

Animated masks and tracking info usually is delivered as separate files but I agree that having all that in one open format would dramatically reduce DI costs.

Not unsympathetic, but, um, good luck with that :-)

Tim

Would it be possible to have a colorist bake a "color map" that can be applied by any system? Rather than have your Fusion color corrector try to match it, you would just have a node that applies a map. Not a LUT, because that works across the whole image. It would be kind of like a normal map in 3D, but for grading it would take into account all power windows, secondaries, etc. Basically every pixel would have assigned math it needs to do no matter what image is under it. So the end result is there. The original DI colorist still creates the maps in his color session, but his map can be applied in any system. Just thinking out loud.

yes, that would be great.

And now is certainly the time to do so. Otherwise we end up with another qwerty. Actually lots of them. EBCDIC anybody?

The complete implementation might be daunting. Maybe defined implementation levels as part of the file format can help? From simple means to complex ones: Software could tell you that it did find color info that it is unable to interpret (like masks) but could show you the image 'as good as it can do'.
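The idea of implementation levels could be sketched roughly like this. The operation names, the `SUPPORTED` set, and the `plan_grade` helper are all hypothetical; the point is only that a reader applies what it understands and reports what it had to skip.

```python
# Hypothetical: the operation types this particular application can apply.
SUPPORTED = {"primary", "curves"}


def plan_grade(operations, supported=SUPPORTED):
    """Split a grade into operations we can apply and types we must skip."""
    applied = [op for op in operations if op["type"] in supported]
    skipped = [op["type"] for op in operations if op["type"] not in supported]
    return applied, skipped


# A made-up grade containing one operation this reader cannot interpret.
grade = [
    {"type": "primary", "gain": 1.1},
    {"type": "mask", "shape": "ellipse"},
    {"type": "curves", "channel": "red"},
]

applied, skipped = plan_grade(grade)
if skipped:
    print("Showing image as well as possible; ignored:", ", ".join(skipped))
```

A simple tool could start at the lowest level and still be honest with the artist about what is missing from the preview.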

In other words this would be so great that starting small while being expandable might be worth trying.

Aiming for 'everything included' would probably make the task so complex that it might never get done. Or, even worse, it would become the job of a committee.

Bigger purchasers (Ascent Media, Technicolor: I am looking at you) should insist that the millions of dollars that they spend on coloring equipment don't leave them with work that gets recorded in odd and obscure file formats.

For all sorts of reasons actually.

Good stuff. Subscribed to your feed.

I watch the struggle with this process frequently, even within the same company.

I think everyone's used to working around it, but I often wonder what the client thinks as they watch a sophisticated spot (I'm thinking commercials at the moment) slowly come together during the job. I hate having to turn part of my brain off while looking at an intermediate stage of a project.

None of this seems like an insurmountable obstacle. Based around XML, the syntactic groundwork would already exist, and code to parse XML would be simplified by existing libraries to interpret it. Several open specs for XML-based spline representations already exist, so cobbling together a collection of representations and filling in whatever's missing wouldn't be too complicated. Embed anything bitmappy in an associated EXR file unless we just want to write all that out in hex into the XML file (I'd rather not, though this method has the advantage of simplicity, if not of file size and human legibility of the XML).

It might be helpful to include language for describing alternate colorspaces, both known types such as 6-color wide-gamut projection methods and as-yet-unknown methods.

Great post - but how to make it happen?

One idea - find the connected power users for different systems; the artists and filmmakers who know a given manufacturer well. (For instance, if Stu and Trish and Chris Meyer talk to Adobe about implementing the standard in AE, it has more effect than say...ummm....me.)

Find the power VIP users, get them on board, then divide and conquer with a united front...whatever the hell that means.

wow... I hope it all comes together.... especially with all the RED footage coming...

This seems like a 2 part issue to me. The first being the actual creative grading of the footage and the second being the LUTs applied to the image to approximate the look on film on a monitor or whatever. I think the former has a much greater chance of finding some sort of standard than the latter. The first is a selection of simple to describe colour transforms which, as has been noted in another comment, could easily be encapsulated in XML. The same cannot be said for the complex colour cubes used to do film look-ups. Every VFX company I've worked for has had its own colour pipeline internally and has invested a lot of time and money into building their own colour cubes, which is all well and good until you have to share shots between facilities. So it's not just the colour grading tool manufacturers who need to be a little more hippyish in their sharing of the love; the VFX houses are also going to need to learn to play nicely with each other if this is to work. Suffice to say, I am not holding my breath for this.

Hi Stu,

I think you might find the integration between CineForm and IRIDAS quite interesting, as it allows non-destructive color management using LUTs at the codec decoder level. In other words, what you are describing, the ability to change the "look" of a file at the OS level as a new grade is made, is available right now. By doing the color management of the "look" of the file at the codec level, you're able to control how files are decoded in any application, and one simple metadata change can trickle across a number of applications seamlessly, everywhere from the editing application to the effects app, etc.

Right now the 3D LUT color-management is only enabled for the RAW file format version of CineForm, but it's coming to the 4:2:2 and 4:4:4:4 versions of the codec as well.

Also one more thing and that is the IRIDAS .look format includes in readable XML all the settings, input/output LUTs, color-corrections, and the settings that were used to create the 3D LUT portion of the .look file. So if someone else wanted to reverse engineer it and translate it to their own format, it can be done.

I would say the IRIDAS XML is as "open" as the FCP XML format, although it's a lot simpler in its overall structure (and doesn't change as much).

Great Post Stu,

I've pretty much been through the same DI process you mentioned, and I am in one right now on this new feature I'm working on. Like you said, more often than not the VFX artists match to the RAW plate with a standard LUT, only to go see the final film with a radically aggressive new look. I think the film industry needs a common format, much like the print, edit, and sound industries have. So far I'm pretty impressed with what Adobe has done, implementing the ICC format across their products. But more work needs to be done.

Why not start with revisiting the edit decision list first? The good ol' CMX3600 is a Pinto with its wheels falling off. Once we have something that can handle more than 2 tracks of video and cuts and dissolves, then let's tackle color, vector shapes, &c.

But open standards for our industry is absolutely something worth pursuing. It might look something like a film industry equivalent to the W3C consortium. Tools could then be evaluated in terms of their standards-compliance, same as web browsers are today.

Individual companies would undoubtedly still try to push through their own special sauce while also (more or less) supporting open standards -- but we would be light years ahead of where we are now.

But I wouldn't model it on the UN as your logo implies, unless you want 95% of this new body's activity to consist of passing anti-Microsoft resolutions instead of getting actual work done.

What do you think of the solution by Color Symmetry?

http://www.colorsymmetry.com/


The ASC, the Academy and many of the manufacturers you mentioned are working on file formats, open standards, and some of the tools you are seeking. It's possible now to put together pipelines that have the type of workflow you propose. The projects you describe are the victims of producers who don't know or understand how to manage the image data they are producing. BTW "broken" implies that it once worked. :-)

But why are they wearing a kimono?

And yes, this is just blindingly obvious.

If you look at EDL, XML, and AAF (and OMF)... of those three, EDL is the most basic and in widespread use.

AAF supports more features for transferring edit information, and IMO that is a weakness.

A- It takes more resources to support.

B- There isn't much to gain from conveying edit information beyond the basic cut, dissolve, and speed change. Any effects you do are sometimes only rough versions anyway, so they will be re-created in the online. And there are so many methods of doing particular effects that it will be difficult to translate every effect.

In color grading, there are different ways of doing splines (Lustre has many many options and parameters for affecting splines) and for things like diffusion/blur (e.g. the blur in Color looks different than other platforms). So I doubt these items will ever be conveyed correctly.

Perhaps it is best to keep it simple. A 3-D LUT will convey per-pixel corrections. To convey spatial corrections, the color grading system could output .jpgs with comments (perhaps one before any spatial corrections, and one after). The person on the other end would be able to visually see how much diffusion, where the major windows are, etc.

Noise reduction (and edge enhancement) perhaps cannot be conveyed that well via a JPEG. But NR would be extremely difficult to convey in some open format anyways, since there are too many ways of doing NR (especially proprietary motion compensated approaches).

That's my thinking anyways.

The whole dream of having the industry standardizing the post process is... just a dream.

IBM tried 25 years ago to use off-the-shelf parts in order to build IBM's first PC. The end result was that they lost control of the IBM PC de facto standard and ultimately they were entirely forced out of the PC business (by selling their PC division to Lenovo).

If any market leader will truly standardize the process of post in an open manner, the end result will be similar to opening all doors and windows to the competition. Whoever is faster and cheaper will prevail.

The market leaders will fight tooth and nail against any attempt at deep open standards in the post industry.

Aren't you really just wanting better communication between departments? And wouldn't a simpler answer be to deliver more work in float? or have a really on to it DI assistant who emails stills daily?

Because try as you might, no standard can compensate for a director or dp saying at the last minute "Hey let's try something different!"

Cheers,

Toby

kinda hard to control this beyond color space and basic color displacements like LUTs, gamma, gain, offset etc. i mean even basic mask shapes are done many, many ways between apps and the conversion of, for example, a b-spline to a hermite curve is wicked different. i think a goal would be to have the people driving these color grading systems have a better understanding of the process as a whole and not just take footage at face value and push the heck out of it. float helps, but a lot of people i've come across don't quite get the non-clamp workflow when they are used to working within an 8/10/12/16 bit format. to me, it's working smart with the tools.

Hi Stu,

Very interesting post. I have been thinking around the issue for a bit and maybe we should meet and discuss our points of view.

Let me introduce myself, I am the Sr. Industry Manager for Film & TV at Autodesk. I come from the industry and i am focusing on exactly these kind of issues.

I am currently traveling, but if you contact me offline, maybe we can meet next time i am in town.

Thanks

S

If you make it a simple standard, you end up with something like Grass Valley EDLs. Standard and pretty much useless for anything other than roughing, which is what JPEG stills do for color correction now. By eliminating variables, you limit the possibilities of what could be done. You can't sell an update of Lustre if it can't do something new, and if that something new can't be recreated in the standard, then you lose the point of standards.

For masking, that's going to be bitmaps, lots of mono channel bitmaps. If you can live with that, then that's a solved problem.

After that, you would need a single voxel array for each color correction. That array would contain the after color values. The array itself would be the source values. Problem there would be size. Each correction would be terabytes in size. 2^32 on each side for float. And each cell would have to contain a triplet of floats. We're talking terabytes for each correction, which would be each mask, each keyframe, each change.

Just too dang much data. Could you approximate it with a smaller voxel array? Sure, but the interpolation would be the special sauce that would make the systems close, but not exactly the same. But even then, would you tolerate even as little as a 100MB per correction?

There's simply no way to store the possible corrections any other way. Every color vector would be possible, so you've got to have some sort of format for recording vectors.

Why color values only? There'd need to be agreement on image processing functions but it seems like most of that's been worked out over the years.

Chad... in my opinion, simple works. For online editing: EDLs work. AAF usually doesn't (even though it is more powerful). EDLs are mostly sufficient and I've seen them used the most.

The main problems with the EDL workflow are mostly unrelated to EDLs. Decks that aren't frame accurate, editors who don't know what they are doing (e.g. universities don't teach you EDLs).

As far as "voxel" arrays, it sounds like you are describing 3-D LUTs. A 65x65x65x3 LUT should be sufficient IMO... as would 33x33x33x3. I believe most vendors are using LUTs of that size.

2- As far as secondaries go, it would potentially be possible to represent them as lift, gamma, gain + a 3-D LUT with a compressed image of the mask alpha/transparency.

The files would not need to be that big.
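A quick back-of-the-envelope check of those LUT sizes, assuming three 32-bit float values per grid point and no file header or compression (the `lut_size_bytes` helper is just for illustration):

```python
def lut_size_bytes(points_per_axis, channels=3, bytes_per_value=4):
    """Uncompressed size of a cubic 3-D LUT: N * N * N grid points,
    each storing `channels` values of `bytes_per_value` bytes."""
    return points_per_axis ** 3 * channels * bytes_per_value


for n in (33, 65):
    size = lut_size_bytes(n)
    print(f"{n}x{n}x{n}x3 float LUT: {size:,} bytes ({size / 2**20:.2f} MiB)")
```

Under those assumptions a 33-point cube is 431,244 bytes and a 65-point cube is 3,295,500 bytes, so even several secondaries per shot stay in the range of a few megabytes.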

We have found 64 or 65 point cubes are an absolute minimum. If you are going to further color-correct the image (i.e., the 3D LUT is not actually the end result, but is getting you to a base point you will be further correcting from), we have found 128-point cubes are necessary to prevent banding in smooth gradients. In binary, a 32-bit floating point 64-point cube is around 3MB, and a 128-point cube is around 24MB.

I worked on a night shot for Superman Returns where the camera rises up the letters of the Daily Planet, and the values for the shot to be seen properly were from .001 to .025 in the darkest areas. The shot only looked good in two places. My monitor and the sweat box (barely), when it was approved.

Any other time I saw it outside SPI it was completely washed out.

I second your sentiments Stu!

Speaking as a programmer, it seems to me that some format of XML would likely work best for this.

Off the top of my head I'd say a node for each frame. That node can contain the settings (-255 to +255) for each of the possible primary adjustment settings; additionally it could contain secondary layered information - coordinates of mask shapes, etc.

The bonus would be that as this system would have an entry for every frame, animation of masks and settings would be built in.

Lastly, XML is also probably the easiest format to use, as it has format guidelines that have been well established already, so support would just be a matter of parsing the file and applying the settings.
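As a rough illustration of the per-frame idea, here is a sketch that parses a made-up XML grade using Python's standard library. The element and attribute names (`grade`, `frame`, `primary`, and so on) are invented for this example and do not come from any real format.

```python
import xml.etree.ElementTree as ET

# Hypothetical file layout: one <frame> node per frame, settings in -255..+255.
SAMPLE = """
<grade shot="example_shot">
  <frame number="1"><primary lift="0" gamma="10" gain="-5" saturation="0"/></frame>
  <frame number="2"><primary lift="0" gamma="12" gain="-5" saturation="20"/></frame>
</grade>
"""


def read_grade(xml_text):
    """Return {frame_number: {setting_name: int_value}} for the primary grade."""
    root = ET.fromstring(xml_text.strip())
    frames = {}
    for frame in root.findall("frame"):
        primary = frame.find("primary")
        settings = {name: int(value) for name, value in primary.attrib.items()}
        frames[int(frame.get("number"))] = settings
    return frames


frames = read_grade(SAMPLE)
print(frames[2]["saturation"])
```

Because every frame carries its own settings, animation falls out for free, at the cost of a larger file than a keyframe-based layout would need.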

I agree with Toby, it is a problem of communication between "technicals" vs. "creativity". No matter the country, if the DP, the director, the client, the agency, even somebody's girlfriend, if someone at some key point raises a hand and says: "hey, wouldn't it be nice to try to warm the whole scene?" that's all you need for the total collapse of a well-planned process.

I guess that besides communication between platforms, human communication and knowledge at each step of a project is a must.

Hmm thinking about this a little more.

Suppose everyone agrees that the basic approach would use a 3-D LUT for the primary grade.

To get secondaries on top of that wouldn't really be that difficult. Have the color grading app export a 3-D LUT representing the secondary CC, and a still image of the mask/matte.

In all compositing programs, they have functions to mask/matte effects. So if there was a 3-D LUT node (or effect), it's pretty trivial to setup the node tree (or layers in AE) to implement the secondaries.

This approach doesn't support keyframing, but that should be ok.
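The node tree described above might look something like this per pixel. Plain functions stand in for real 3-D LUT lookups, and the names `apply_secondary` and `grade_image` are made up for the sketch; the matte is assumed to be a 0..1 value per pixel.

```python
def apply_secondary(pixel, matte, secondary_lut):
    """Blend the secondary correction over the pixel using the matte (0..1)."""
    corrected = secondary_lut(pixel)
    return tuple((1.0 - matte) * p + matte * c for p, c in zip(pixel, corrected))


def grade_image(pixels, mattes, primary_lut, secondary_lut):
    """Primary grade everywhere, then the secondary only where the matte allows."""
    graded = []
    for pixel, matte in zip(pixels, mattes):
        base = primary_lut(pixel)
        graded.append(apply_secondary(base, matte, secondary_lut))
    return graded


# Stand-ins for exported LUTs: an identity primary and a secondary that doubles values.
identity = lambda p: p
double = lambda p: tuple(2.0 * c for c in p)

pixels = [(0.5, 0.5, 0.5)] * 3
mattes = [0.0, 0.5, 1.0]   # outside the window, on its soft edge, inside it
result = grade_image(pixels, mattes, identity, double)
```

Any compositing package that can do a matted mix between two branches can reproduce this, which is why nobody has to share their internal algorithms.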

Glenn, I think that is a terrific solution. It simplifies the problem down to something that can be accomplished easily and does not require the software companies to give away any deep dark secrets or implement identical algorithms. Very nice!

...and the mattes could be downsampled and compressed for space, and could be animated if needed. Animating 3D LUTs isn't so fun but worst case scenarios you're still talking about a color correction for a complex shot compressing down to a manageable size, like a few megs.

The more I think about it, the more I like it!

Hollywood, CA – November 15th 2007 - On October 29th 2007 da Vinci Systems and Gamma and Density Co. took part in the first demonstration of the ASC CDL ... / ...The ASC CDL has been developed by members and contributors of the ASC Technology Committee to provide an industry 'standard' for cross-platform exchange of primary RGB color correction data...

----

More on: http://3cp.gammadensity.com/
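For readers unfamiliar with it, the core of the ASC CDL is a per-channel slope, offset, and power: out = (in × slope + offset) ^ power. A minimal sketch follows; the clamp to 0..1 before the power step and the `asc_cdl` function name are assumptions made for this example, so check the published spec for the exact clamping rules.

```python
def asc_cdl(rgb, slope=(1.0, 1.0, 1.0), offset=(0.0, 0.0, 0.0), power=(1.0, 1.0, 1.0)):
    """Apply slope, offset, and power per channel: (in * slope + offset) ** power."""
    graded = []
    for value, s, o, p in zip(rgb, slope, offset, power):
        v = value * s + o
        v = min(max(v, 0.0), 1.0)   # assumed clamp so the power step stays well defined
        graded.append(v ** p)
    return tuple(graded)


# Nine numbers describe the whole primary correction for a shot.
out = asc_cdl((0.5, 0.5, 0.5),
              slope=(2.0, 1.0, 0.5),
              offset=(0.0, 0.1, 0.0),
              power=(1.0, 1.0, 2.0))
```

This covers exactly one primary correction, with no curves, masks, or secondaries.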

Finally someone said it without holding back. Great post Stu.

Just like anything in our industry we don't have enough voices or an accepted organisation that can make this happen for us and it sux ;(

Being forced to match grading by looking at quicktimes and trying to figure out grading changes for different sequences is a retarded and a redundant process, especially if the temp grade has already been established anyway.

To me this way of working makes me grind my teeth on every new show when talking to the DP and/or director. They spend hours in the pre DI suite and we can't even get some form of standard color transport.

As visual artists we should be adding to this, not making it more diluted.

I have hopes for the ASC-CDL, but sadly only the Da Vinci "resolve" can export this and the Da Vinci "2K+" cannot, regardless of the fact that most pre DI is done on the latter.

Anyway, cheers ;P

That's why Workflowers exists, it's a big part of our job !

http://www.workflowers.net