using the eLin color model in floating point apps

Friday, February 25, 2005 at 9:27PM

Much of my writing on the benefits of working linear is tied to the eLin documentation. It occurred to me that it might be helpful to describe these advantages in more general terms, and to provide equivalences of the eLin color pipeline for two popular floating point compositing apps.

First, let's get some terms straight. A lot of people use the term linear to describe images that look correct on their displays without any color correction. In visual effects circles we sometimes hear about converting Cineon images from log space to “linear” so they “look right.”

When I use the term linear, I am talking about something else. I am talking about a linear measure of light values. I freely intermingle terms like photometrically linear, radiometrically linear, scene-referred values, gamma 1.0, and just plain old linear when describing the color space in which pixel values equate to light intensities.

If you were to display such an image on a standard computer monitor without any correction, it would look very dark. The best way to visualize this is to think about the “middle gray” card you bought when you took your first photography class. It appears to be a value midway between black and white, both to our eyes and in our correctly-exposed shots, and yet it is described as being 18% gray.

We’d want images of this card to appear at or near 50% on our display. But in scene-referred values, an object that is 18% reflective should have pixel values of 18%, or 0.180 on a scale of 0–1.

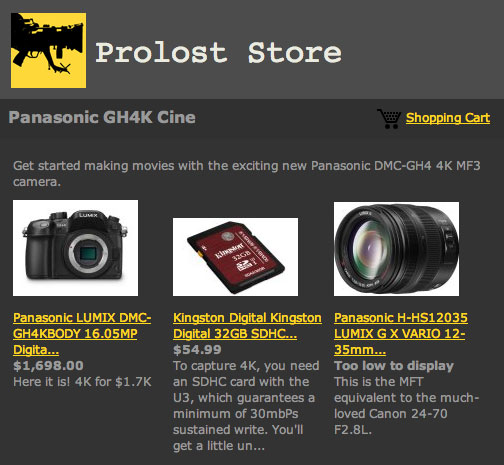

Virtual Graycard Comparotron2000™:

Linear image with no LUT (card = 0.18, or 18%)

Image with a 2.2 LUT applied (card = 0.46, or 46%)
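The two card values in that comparison come from a single power function. Here is a minimal Python sketch, assuming the display LUT is a pure 2.2 power curve (true sRGB differs slightly). The helper name `apply_display_gamma` is made up for illustration and is not part of eLin:

```python
def apply_display_gamma(linear_value, gamma=2.2):
    """Encode a linear-light value (0-1) for display with a pure power curve."""
    return linear_value ** (1.0 / gamma)

card_linear = 0.18  # 18% reflective gray card, scene-referred
card_display = apply_display_gamma(card_linear)

# Show both values rounded to two decimals, as in the caption above.
print(f"linear {card_linear:.2f} -> display {card_display:.2f}")
```

The result lands at roughly 0.46, which is the "at or near 50%" we want the card to occupy on screen.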

If your digital camera didn't introduce a gamma 2.2 (or thereabouts) characteristic into the JPEGs it shoots, they'd look like the linear example above. The images where the card “looks right” are variously described as perceptually encoded, gamma encoded, or they may even be identified as gamma 2.2 encoded, or having a gamma 2.2 characteristic curve. A specific variant of gamma 2.2 goes by the name sRGB. In an attempt to create a catchy (and catch-all) term, the eLin documentation refers to these color spaces collectively as vid, since NTSC video has a gamma of 2.2 (kindasorta), and since these images “look right” on a video monitor.

Many of the image processing tools we use behave differently when performed at different gammas. If you gamma an image dark, blur it, and gamma it back up (inverse of gamma = 1/gamma), you get a different result than if you simply blur the image.
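To see the gamma/blur/un-gamma difference in numbers, here is a small Python sketch on a single row of pixels. It assumes a gamma control that computes `in ** (1 / gamma)` (so values below 1 darken) and uses a toy 3-tap box blur; both helpers are illustrative, not any app's actual implementation:

```python
def box_blur(row):
    """3-tap box blur on a 1D row of pixels, repeating the edge pixels."""
    padded = [row[0]] + list(row) + [row[-1]]
    return [sum(padded[i:i + 3]) / 3.0 for i in range(len(row))]

def gamma(row, g):
    """Apply a gamma adjustment: out = in ** (1 / g), so g < 1 darkens."""
    return [v ** (1.0 / g) for v in row]

row = [0.0, 0.0, 1.0, 1.0]  # a hard edge from black to white

# Blur the values as they are.
plain = box_blur(row)

# Gamma the image dark (1/2.2), blur it, then gamma it back up (2.2).
sandwiched = gamma(box_blur(gamma(row, 1 / 2.2)), 2.2)

print([round(v, 3) for v in plain])
print([round(v, 3) for v in sandwiched])
```

The two soft-edge pixels come out around 0.33 and 0.67 in the plain blur, but around 0.61 and 0.83 in the sandwiched version. Same blur, different gamma, visibly different edge.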

When you convert an image to linear space, your subsequent image processing operations better match real-world physical properties of light. If you are accustomed to processing perceptually encoded images, you will probably find that switching to g1.0 processing will make your familiar effects look more organic (with a few notable exceptions to be covered in a later article).

All this talk can be boiled down to a simple description:

Processing and blending in linear simulates light values interacting. To make a gamma 2.2 image linear, apply a gamma of 1/2.2 (0.4545). View linear images through a gamma 2.2 display correction (LUT). Convert images back to 2.2 (vid) for integer storage and display, or leave them linear for float storage (such as EXR).
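As a rough illustration of that recipe, the Python sketch below blends two 8-bit vid pixels both ways. It assumes vid is a pure 2.2 power curve, and the function names simply echo the macro naming; they are stand-ins, not the macros themselves:

```python
GAMMA = 2.2  # assumed pure power curve standing in for "vid"

def vid2lin(v):
    """Gamma-encoded value (0-1) to linear light. Same as applying a gamma of
    1/2.2 in an app whose gamma control computes in ** (1 / gamma)."""
    return v ** GAMMA

def lin2vid(v):
    """Linear light back to gamma-encoded, clamped to 0-1 for integer storage."""
    return min(max(v, 0.0), 1.0) ** (1.0 / GAMMA)

def mix_in_linear(a_8bit, b_8bit):
    """50/50 blend of two 8-bit vid pixels, done on linear-light values."""
    a, b = vid2lin(a_8bit / 255.0), vid2lin(b_8bit / 255.0)
    return round(lin2vid(0.5 * (a + b)) * 255)

def mix_in_vid(a_8bit, b_8bit):
    """The same blend done directly on the encoded values, for comparison."""
    return round(0.5 * (a_8bit + b_8bit))

# Blend pure black with pure white, first in linear, then in vid.
print(mix_in_linear(0, 255), mix_in_vid(0, 255))
```

Mixing black and white in linear gives an 8-bit value of about 186, versus 128 when the encoded values are averaged directly. The linear result is what half the light actually looks like once it is re-encoded for display.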

Earlier we talked about Cineon scans. Converting a Cineon image from its native “log” space to true linear is very simple: just use a transfer gamma of 1.0. In Fusion, this means setting the Conversion Gamma slider to 1.0 (the default). In Shake, this means a DGamma of 1.7 (the slider's value is internally multiplied by 1/1.7, so 1.7 = 1.0). The ProLost definition of “high end” is “needlessly confusing.”
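For reference, here is a hedged Python sketch of a log-to-linear conversion with a transfer gamma. The constants (reference white 685, reference black 95, 0.002 density per code value, negative gamma 0.6) are the commonly quoted Cineon defaults, not values read out of Fusion, Shake, or the macros, and applying the transfer gamma as a scale on the exponent is likewise an assumption:

```python
import math

REF_WHITE = 685           # 10-bit code value mapped to linear 1.0 (assumed)
REF_BLACK = 95            # 10-bit code value mapped to linear 0.0 (assumed)
DENSITY_PER_CODE = 0.002  # assumed density step per code value
NEG_GAMMA = 0.6           # assumed film negative gamma

def log2lin(code, transfer_gamma=1.0):
    """10-bit Cineon code value to linear light (codes above 685 exceed 1.0)."""
    scale = DENSITY_PER_CODE / NEG_GAMMA * transfer_gamma
    black = 10.0 ** ((REF_BLACK - REF_WHITE) * scale)
    return (10.0 ** ((code - REF_WHITE) * scale) - black) / (1.0 - black)

def lin2log(lin, transfer_gamma=1.0):
    """Inverse of log2lin; returns an unrounded, unclamped code value."""
    scale = DENSITY_PER_CODE / NEG_GAMMA * transfer_gamma
    black = 10.0 ** ((REF_BLACK - REF_WHITE) * scale)
    return REF_WHITE + math.log10(lin * (1.0 - black) + black) / scale

def shake_dgamma_to_transfer_gamma(dgamma):
    """Per the text, Shake's DGamma slider is multiplied by 1/1.7 internally."""
    return dgamma / 1.7

# A Shake DGamma of 1.7 corresponds to a transfer gamma of 1.0.
g = shake_dgamma_to_transfer_gamma(1.7)
print(log2lin(685, g), log2lin(95, g), log2lin(1023, g))
```

With a transfer gamma of 1.0, code 685 maps to 1.0, code 95 maps to 0.0, and the top of the range lands well above 1.0, which is why a float pipeline is handy for holding Cineon highlights.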

There's a slight hiccup here, because the results of linearizing a Cineon to g1.0 and then viewing the results through a g2.2 correction are not the same as the results of using a Cineon transfer gamma of 2.2. This means that if you want to round-trip vid and Cineon elements in your comp, you have to choose your definition of “linear” — Cineon’s or video’s.

Welcome to the ConfusoDome™.

In eLin we differentiate between these two working modes with checkboxes for Cineon Emulation.

In the Fusion and Shake workflows, I've differentiated between these two methods with separate sets of Macros.

The straight gamma definition of linear:

eLin_vid2lin

eLin_log2lin

eLin_lin2vid

eLin_lin2log

And the Cineon flavor:

eLin_cin_vid2lin

eLin_cin_log2lin

eLin_cin_lin2vid

eLin_cin_lin2log

eLin_cin_lin2vid.lut

Either one of these systems will round-trip Cineon and vid images safely. It’s just a question of which definition of linear you like better. The straight gamma version is more aggressive, yielding brighter highlights from a Cineon file and with a more precipitous slope near black. The straight gamma version also provides easier back-and-forth with certain 3D apps that support gamma correction but not LUTs (see an example).

Download the Fusion Macros (8kB .rar file)

Download the Shake Macros (32kB .zip file)

Armed with these Macros, you can follow the simple workflow outlined above. Convert vid and log sources to linear, comp away, and convert the results to vid or log for output. In the straight gamma method, you simply use a display correction of gamma 2.2 — easily done in both apps. In the Cineon method you will need to use the supplied LUT file, or create a Shake viewscript from the cin_lin2vid Macro.

This is exactly the color model of eLin, except that eLin packs this whole enchilada into the 15+1bpc color space of After Effects 6.5.

Any questions?

And now the caveats: I don't claim to be an expert at any of this. Use at your own risk. Offer void in Utah. No smoking in the bathroom. The Shake workflow really requires a view script for the cin_lin2vid LUT — the .lut file I've included is in Fusion format. But I don't know how to use Shake. Nor do I know how to use Fusion. I hope some brave soul will post a comment about how he/she made the Shake workflow actually work. Federal law prohibits removal of this tag except by the consumer.

Reader Comments (11)

eh...almost one year old...dont know if anyone will see it...

I like this article very much, cause it kinda explains all this gamma/linear/nonlinear/yadayada stuff in a way that it describes what the workflow with it should look like...

anyway, have some questions about how to make and treat inputs which I make...

I am a 3d artist, working in 3d max, and recently discovered some tutorials and discussions on this subject, but it still isnt clear for me...in short..it is said: turn gamma correction in max to 2.2, just for previewing purposes...render it...( image looks much darker than previewed, as ur example above )...open it in comp app, and look at it with a LUT....

now, with all this said in mind, what would be your suggestion, as how to treat these images when imported in AE and processed via this eLin technology

hopefully, u`ll see this soon...

thx

Marko

Hi Marko! All you need to do with the workflow you describe is apply vid2eLin to your renders and set the gamma to 1.0. Then, with a standard eLin2vid LUT layer (set to the default of g2.2) you'll see your image corrected for display. The last step is to set your eLin settings to Cineon Emulation: Off, so that a simple gamma LUT is used. In this way you can be sure your results will match what you saw in Max.

I've got a little question on this if I may - it's to do with rolling your own eLin in FU..

1) If in my LoaderLUT I apply LogtoLin with Conversion Gamma set to 1.0

2) Have a viewer LUT of 2.2 (or whatever my monitor is set to)

3) Comp in this space

4) In my saverLut put a LintoLog with the same loader settings?

How is this different to eLin?

Hi Stu!

Having used different colorspace workflows, and after reading your stories on the use of eLin on your blog, I was wondering if you could elaborate a bit more on the topic "comping in real linear space makes tools behave in a more natural way, except for some tools".

Let me explain my point. I do see the benefits within image processing tools that require "averaging" pixels, where the maths in a linear space behave more naturally than in a gamma-corrected space. But in my experience, color correction tools have a weird behaviour on linear images. Actually, I've found that only "mult" or brightness color corrections do behave more naturally in linear than they do in gamma-corrected space.

On the other hand, if you try to apply any correction involving changing the curve on the blacks side, like a contrast, or even a gamma, the results are better when working in a gamma-corrected space. Same applies to pulling a key from a green/blue screen.

I have often found myself using different colorspaces depending on my needs, which is safe if you know what you're doing at each stage, but I was wondering if that's what you meant when you say that linear feels more organic to the use of compositing tools with some exceptions... are you referring to any of the issues I described above? It would be great to have some in-depth talk about that.

Thanks for your attention and for the great resource your blog is...

Cheers,

blue.

This is very clear - Thank You.

What you call linear - photometrically linear - is what I call linear. But it seems most books call the perceptually encoded image that "looks right" linear.

I have read your other blog posts about the proper color space for operations, and linear is supposed to be used for blurs, image transformations, and anything that simulates real light.

It seems to me that the "linear" space these operations should be calculated in is photometrically linear space. Is this correct?

What are the consequences if these operations are done in the perceptually encoded "linear" space? I imagine you will have similar problems to those you have when doing these operations in log space.

When I say "linear," I always mean linear-light. So, "yes" to everything you asked.

These macros provided on this page are for old Fusion 4. Is there a chance that you could come up with a fast fix for Fusion 5 architecture Stu?

Thanks for a very informative page! It explains many issues more clearly than many other pages, where after reading an article I had more questions than solutions :)

I figured it is pretty easy job to slap them together quickly.

But now I got new question, regarding that page here:

http://mysite.verizon.net/spitzak/conversion/cineon.html

is it correct that your set of macros are not doing that kind of calculation?

Hey Stu,

Love the site - really useful information that is helping me become slightly less confused.

Am hopefully finishing a promo to HD shortly, and will be being given DPX (log) files to conform, and then grading in an Online system before outputting to HDCAM-SR. We do not intend to go back out to film, so I do not need to preserve the log colour space.

Would we need to convert to linear space, use some kind of linear to video LUT, and do all comping and colour work there before exporting to video?

Or could we just bypass LUTs and work in the system's native video colour space?

If you have time to reply even just briefly, I'd be most grateful!

Best wishes,

G.

Hi Gwyn,

Short answer: Check with the people doing the online. There are a million ways to do this stuff but the right one is the one that works for you and your post house.

I'm absolutely loving your blog. I've learned a ton. I've followed you on your posts up until "floating point." I understand the linear vs. log vs. vid idea, but what does it mean to be in a "floating point" space, exactly?